A fintech startup launched a sleek AI assistant to help users manage subscriptions.

The interface was clean, the branding tight, and early traffic surged.

But conversions? Nearly flat.

Users abandoned the experience halfway, and nobody could explain why. There were no heatmaps to study or button-click data to analyse. All the team could see were chatbot transcripts and a growing list of silent drop-offs.

The reason: They were measuring a talking interface with silent metrics.

In 2025, as conversational UX replaces traditional UI, product teams face a critical shift: they no longer design screens. They design experiences that speak.

Most importantly, they need to measure interaction, not navigation.

This blog breaks down the UX metrics that actually matter for AI-powered interfaces, from chatbots to voice assistants, and how forward-thinking teams can gain clarity, not confusion, from their conversational flows.

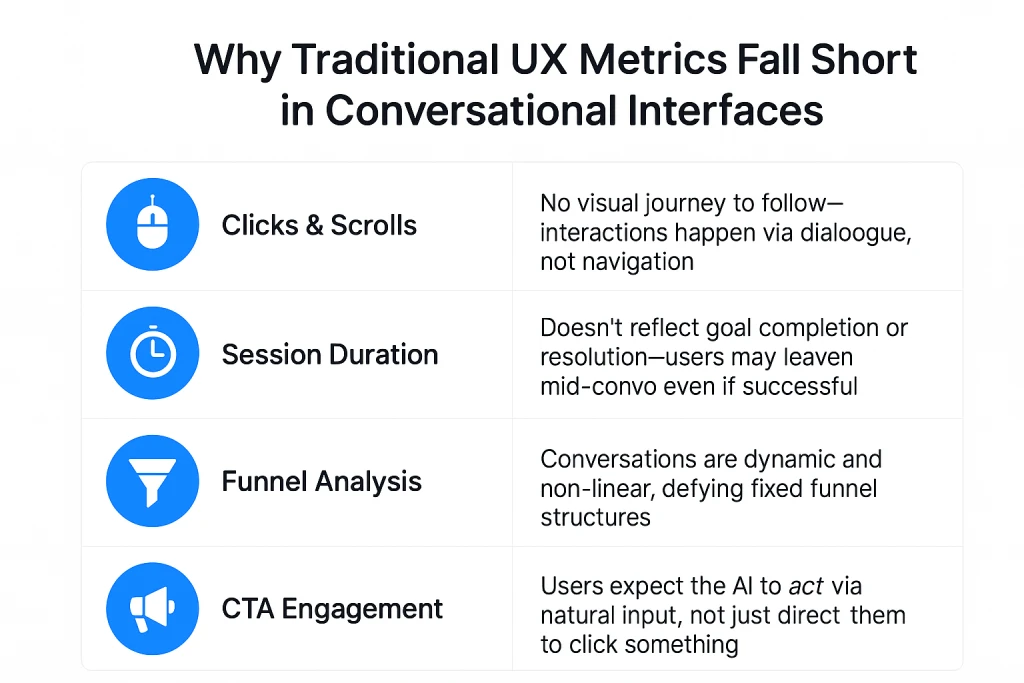

Why Traditional Metrics Fail in AI-First UX

Traditional UX metrics like click-through rate, bounce rate, and time on page were made for screens, not conversations. They track movement, not meaning.

But conversational UX is different. It’s fast, fluid, and context-driven. Users don’t navigate—they interact. In this shift, usability takes a backseat to understanding.

Here’s how traditional metrics fall short—and what you should measure instead:

In conversational UX, the KPI is no longer usability—it’s understanding.

The UX Metrics That Matter in Conversational Interfaces in 2025

1. Intent Recognition Accuracy:

- Definition: How accurately the system identifies a user’s request or goal.

- Why It Matters: Misinterpreting intent leads to failed experiences.

- Track It With: Natural Language Understanding (NLU) benchmarking tools, confusion matrices.

- Target Benchmark: >85% accuracy in mature models.
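
As a rough illustration, here is a minimal Python sketch of how this metric could be computed. It assumes you have a labeled test set: for each utterance, the intent a human assigned and the intent your NLU model predicted. The intent names and function names are invented for the example.

```python
from collections import Counter


def intent_accuracy(expected, predicted):
    """Fraction of utterances whose predicted intent matches the labeled one."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    if not expected:
        return 0.0
    correct = sum(e == p for e, p in zip(expected, predicted))
    return correct / len(expected)


def confusion_counts(expected, predicted):
    """Count (expected, predicted) pairs; mismatched pairs show which intents get confused."""
    return Counter(zip(expected, predicted))


# Hypothetical labeled test set (assumption: four utterances, three intents).
expected = ["cancel_subscription", "check_balance", "cancel_subscription", "pause_subscription"]
predicted = ["cancel_subscription", "check_balance", "pause_subscription", "pause_subscription"]

accuracy = intent_accuracy(expected, predicted)
confusions = confusion_counts(expected, predicted)
```

Here three of four predictions match, so `accuracy` falls below the 85% benchmark, and `confusions` shows that a cancel request was mistaken for a pause request.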

2. Time-to-Resolution (TTR):

- Definition: The time it takes from user input to successful task completion.

- Why It Matters: Measures efficiency and friction in AI workflows.

- Ideal Range: Roughly 30 to 60 seconds or less for routine interactions.
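
One possible way to compute TTR from conversation logs is sketched below. It assumes each conversation record carries a start timestamp and, if the task succeeded, a resolution timestamp, both in seconds. The field names `started_at` and `resolved_at` are assumptions, not a standard schema.

```python
from statistics import median


def time_to_resolution(conversations):
    """Return TTR in seconds for every conversation that ended in a completed task."""
    return [
        c["resolved_at"] - c["started_at"]
        for c in conversations
        if c["resolved_at"] is not None
    ]


# Hypothetical log records; resolved_at is None when the user abandoned the task.
conversations = [
    {"started_at": 0.0, "resolved_at": 25.0},
    {"started_at": 10.0, "resolved_at": 70.0},
    {"started_at": 5.0, "resolved_at": None},
    {"started_at": 0.0, "resolved_at": 41.0},
]

ttrs = time_to_resolution(conversations)
median_ttr = median(ttrs)
```

The median is used here rather than the mean so that a handful of very long conversations does not hide the typical experience. Abandoned conversations are excluded and are better tracked through completion rate.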

3. Dialog Completion Rate:

- Definition: Percentage of conversations where users finish what they started.

- Why It Matters: High completion = high usability and flow clarity.

- Tooling: Conversation analytics (e.g., Dashbot, Voiceflow).
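
If you are working from raw transcripts rather than an analytics tool, a sketch like the following could give you both the completion rate and a first look at where the silent drop-offs happen. The `completed` and `last_step` fields and the step names are hypothetical.

```python
from collections import Counter


def completion_rate(conversations):
    """Share of conversations that reached the end of their flow."""
    if not conversations:
        return 0.0
    completed = sum(1 for c in conversations if c["completed"])
    return completed / len(conversations)


def drop_off_steps(conversations):
    """Count the last step reached in each conversation that was not completed."""
    return Counter(c["last_step"] for c in conversations if not c["completed"])


# Hypothetical conversation summaries for a subscription-cancellation flow.
conversations = [
    {"completed": True, "last_step": "confirm_cancellation"},
    {"completed": False, "last_step": "choose_subscription"},
    {"completed": True, "last_step": "confirm_cancellation"},
    {"completed": False, "last_step": "choose_subscription"},
    {"completed": False, "last_step": "verify_identity"},
]

rate = completion_rate(conversations)
drop_offs = drop_off_steps(conversations)
```

In this sample, `drop_offs.most_common(1)` points at `choose_subscription` as the step where most users give up, which is the kind of signal a heatmap would have provided on a screen.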

4. Correction Loop Rate:

- Definition: Frequency of users repeating or rephrasing their input.

- Why It Matters: High correction = low clarity or poor NLU performance.

- Optimization: Prompt refinement, better training data.
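
Detecting a rephrase is a judgment call. The sketch below uses a simple heuristic, treating two consecutive user messages as a correction when their text is highly similar, based on `difflib.SequenceMatcher` from the Python standard library. The 0.6 threshold is an assumption you would tune against your own transcripts, and a production system might compare predicted intents or embeddings instead.

```python
from difflib import SequenceMatcher


def is_rephrase(previous, current, threshold=0.6):
    """Heuristic: consecutive messages with similar text count as a repeat or rephrase."""
    ratio = SequenceMatcher(None, previous.lower(), current.lower()).ratio()
    return ratio >= threshold


def correction_loop_rate(user_turns, threshold=0.6):
    """Share of consecutive user-message pairs flagged as a repeat or rephrase."""
    if len(user_turns) < 2:
        return 0.0
    pairs = zip(user_turns, user_turns[1:])
    repeats = sum(is_rephrase(a, b, threshold) for a, b in pairs)
    return repeats / (len(user_turns) - 1)


# Hypothetical user messages from one conversation, in order.
user_turns = ["cancel my plan", "cancel my plan please", "thanks"]

loop_rate = correction_loop_rate(user_turns)
```

The second message restates the first, while the third does not, so one of the two consecutive pairs is flagged.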

5. Fallback Frequency:

- Definition: Rate at which the AI fails to respond accurately or defaults to “I didn’t understand.”

- Why It Matters: Indicates training gaps or poor conversational design.

- Acceptable Range: <5% in optimized systems.
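
A minimal sketch of the calculation, assuming your bot platform marks each bot turn that was served by the fallback handler (the `is_fallback` flag is a hypothetical field name):

```python
def fallback_rate(bot_turns):
    """Share of bot turns that were fallback responses."""
    if not bot_turns:
        return 0.0
    fallbacks = sum(1 for turn in bot_turns if turn["is_fallback"])
    return fallbacks / len(bot_turns)


# Hypothetical sample: 40 bot turns, one of which was a fallback.
bot_turns = [{"is_fallback": False}] * 39 + [{"is_fallback": True}]

rate = fallback_rate(bot_turns)
within_target = rate < 0.05  # the <5% range suggested above
```

Counting per bot turn is one choice. Counting per conversation (the share of conversations with at least one fallback) is another reasonable definition, and the two can differ noticeably, so it helps to state which one a dashboard reports.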

6. Post-Interaction Sentiment:

- Definition: User satisfaction after completing a conversation.

- Why It Matters: Captures the emotional response, which is key to retention and loyalty.

- Tracking Methods: NLP-based sentiment scoring, quick user feedback.

Common Blind Spots in Conversational UX

- Multi-Intent Conversations

Users don’t stick to one goal—they ask follow-ups, switch contexts, or layer requests. Most bots fail to track or adapt to this fluidity.

- Context Retention

Can your system remember what was said two messages ago? If not, users will feel unheard and start over—or worse, drop off.

- Cross-Device Continuity

Conversations may start on mobile, continue on desktop, and end via voice. Few systems track experiences across platforms cohesively.

- Latency Blind Spots

Even a 2-second delay feels clunky in a chat. AI response time is UX. Yet many teams ignore this simple, trackable friction point.

- Over-Indexing on Task Completion

Completion doesn’t equal satisfaction. Was the interaction smooth? Was the language natural? Was the user confident in the response?
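
Of the blind spots above, latency is probably the easiest to start tracking. As a sketch, assuming you log the time in seconds between each user message and the bot's reply, you could report a high percentile and the share of replies that cross the 2-second mark. The function names and sample values are illustrative only.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of the data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def slow_share(latencies, threshold_s=2.0):
    """Share of replies that took at least threshold_s seconds."""
    if not latencies:
        return 0.0
    return sum(1 for s in latencies if s >= threshold_s) / len(latencies)


# Hypothetical response times in seconds.
latencies = [0.4, 0.9, 2.5, 1.2, 3.1]

p95 = percentile(latencies, 95)
share_slow = slow_share(latencies)
```

A high percentile is reported instead of the average because a few slow replies are what users notice, and an average tends to smooth them away.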

Why This Matters More Than Ever

In 2025, AI interfaces are your product. You’re not just designing for interaction—you’re designing for trust, speed, and clarity. And if you can’t measure those outcomes, you can’t improve them.

Users expect seamless, human-first dialogue. And that means measuring whether:

- They feel understood

- They reach their goal without frustration

- The system adapts intelligently

Anything less is guesswork.

BlendX: Designing for Measurable Impact

At BlendX, we specialize in Agentic UX: human-first design that empowers AI to respond and understand.

We don’t just design interactions. We build measurable, scalable experiences across chat, voice, and AI-driven platforms. Our clients get UX that is:

- Predictable to manage

- Measurable by design

- Scalable without friction

If you’re building conversational interfaces, it’s time to measure what matters.

Partner with BlendX. Let’s turn your AI into a product users trust.